A high school student was handcuffed and searched after an AI-powered security system identified his empty bag of chips as a possible firearm.

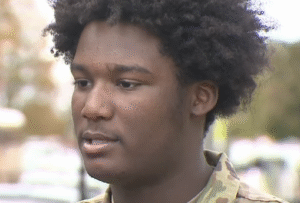

Last Monday evening, Kenwood High School student Taki Allen, 16, was told to get down on his knees and put his hands behind his back, then was handcuffed, after the AI gun-detection system Omnilert sent out an alert that someone on campus had “a weapon.”

According to the school’s principal, Kate Smith, the security department canceled the alert after determining there was no threat. Smith, however, did not realize the alert had been canceled, so she reported it to the school resource officer, who in turn called the police.

When police arrived, they searched Allen. Finding no firearm, officers walked over to where he had previously been and found, on the ground, the Doritos bag he had been holding.

Speaking to local news source WBAL, Allen recalled the moment when “eight cop cars” arrived on the scene.

“The first thing I was wondering was, was I about to die? Because they had a gun pointed at me,” said Allen via WBAL. “I was just holding a Doritos bag — it was two hands and one finger out, and they said it looked like a gun.”

The incident comes as AI-powered security systems continue to be deployed in schools and by police departments.

Omnilert alone is expanding to 50 schools across the U.S. through its Secure Schools Grant Program. Earlier this year, the system was questioned for its failure to detect a school shooter at Antioch High School. On Jan. 22, the AI technology failed to pick up the shooter's weapon because it was not visible. One classmate was killed and another was wounded in the shooting. According to Omnilert CEO Dave Fraser, the software is relatively new and has flaws at times.

Several organizations, including the American Civil Liberties Union, have called upon security and police forces to halt the use of AI, specifically generative AI. In recent years, departments have begun using AI to transcribe audio as well as detect faces using facial recognition. The Aurora Police Department became the latest department to incorporate facial recognition technology after the city council approved the measures.

According to the ACLU, the use of large AI models can create problems when they do not work well. Previous research has already found that women and BIPOC individuals are the most often misidentified by these tools.

“If LVMs remain unreliable, but just reliable enough that people depend on them and don’t double-check that results are accurate, that could lead to false accusations and other injustices in security contexts,” said the ACLU. “But to the extent it becomes more intelligent, that will also allow for more and richer information to be collected about people, and for people to be scrutinized, monitored, and subjectively judged in more and more contexts.”

Following the Kenwood High School alert, Baltimore County Councilman Julian Jones publicly called for a review of Omnilert, urging the security department to ensure that “there are safeguards in place, so this type of error does not happen again.”